Uniprocessor Garbage Collection Techniques

Overview

This page contains notes on the paper "Uniprocessor Garbage Collection Techniques" by Paul R. Wilson [Wil92].

§ 2 Basic Garbage Collection Techniques

§ 2.1 Reference Counting

Pros

- Incremental nature of operations makes it suitable for real time applications.

- Space efficient, able to operate with very low space overhead.

- Garbage typically collected immediately, which can be beneficial for object finalizers.

- May improve locality of reference.

- Easier to implement than full gc.

- Easier to reason about object lifetimes.

Cons

- Difficult to make efficient.

- Cost of collection is generally proportional to amount of work done by the program, with a large constant of proportionality.

- E.g., if a variable is reassigned from one pointer to another, both pointers ref counts must be updated.

- Short lived stack variables can incur a large overhead if args are

incremented / decremented even for short lived functions. One

solution is deferred reference counting [DB76].

- "Much of this cost can be optimized away by special treatment of local variables [DB76]. Rather than adjusting reference counts and reclaiming objects whose counts become zero, references from the local variables are not included in this bookkeeping most of the time… Garbage collection can only be done when references from the stack are taken into account."

- There is also the cost of reclamation: "It is difficult to make these reclamation operations take less than serveral instructions per object, and the cost is therefore proportional to the number of objects allocated by the running program."

- Must deal with cycles.

- ref counting is conservative.

- ref counting systems often include some other kind of collector to deal with cyclic structures.

- Other techniques are generally more efficient and reliable.

§ 2.2 Mark-Sweep Collection

Pros

- Reasonably simple to implement.

- With the right optimizations, performance can be comparable to copy collectors.

Cons

- Fragmentation

- Mitigated by keeping separate free lists for objects of different sizes and merging adjacent free space.

- Must decide whether to alloc new memory for small objects or split up large contiguous blocks.

- Cost of collection is proportional to the size of the heap.

- Can be mitigated by using a bitmap to track live objects in the heap. If live objects are clustered, then the cost of marking may be greatly reduced.

- Poor locality of reference due to fragmentation.

- Not as terrible as it may at first seem, since groups of related objects are often allocated/destroyed together.

§ 2.3 Mark-Compact Collection

Pros

- Avoids fragmentation problems of mark-sweep.

- Allocation can be done as pointer increment.

- Easy to allocate different sized objects.

Cons

- Potentially slow due to multiple passes over the heap.

§ 2.4 Copying Garage Collection

A Simple Copying Collector: "Stop-and-Copy" Using Semispaces

See also: Semispace collector [FY69]. Cheney algorithm [Che70].

- Pros

- All the advantages of Mark-Compact (no fragmentation, fast alloc, etc.).

- Integrate tracing and copying phases, so most objects only need to be traversed once.

- Work done proportional only to amount of live data (not heap size)

- Cons

- Requires double the heap space.

- Breadth-first approach of Cheney algorithm may result in poor locality of reference.

Efficiency of Copying Collection

Since gc work is proportional to the number of live objects only and not heap size, one way to make copy collection more efficient is to increase the heap size, which allows more garbage to be generated before gc is required.

§ 2.5 Non-Copying Implicit Collection

Recently, Baker [Bak92] has proposed a new kind of non-copying collector that with some of the efficiency advantages of a copying scheme. Baker's insight is that in a copying collector, the "spaces" of the collector are really just a particular implementation of sets. Another implementation of sets could do just as well, provided that it has similar performance characteristics. In particular, given a pointer to an object, it must be easy to determine which set it is a member of; in addition, it must be easy to switch the roles of the sets, just fromspace and tospace roles are exchanged in copy collector.

The basic idea is that only one heap exists, and blocks are linked in a doubly-linked list, and each block has a flag that indicates which "space" it belongs to. At gc time, live objects are unlinked from the list and their space flag is flipped to indicate they have been "moved".

Pros

- Tracing cost for large objects is lower than traditional copying collector (since objects aren't copied).

- Since objects aren't moved, pointers don't need to be updated.

Cons

- Fragmentation

- Slightly higher per-object constant factor.

§ 2.6 Choosing Among Basic Techniques

Three basic cost components:

- Initial work required at each collection, such as root set scanning.

- Work done per unit of allocation.

- Work done during garbage detection (tracing).

Basically: there is no clear winner among the simple collectors presented above. It depends on how your program is allocating memory, and on various constant factors for a particular implementation.

§ 2.7 Problems with a Simple Garbage Collector

- Poor locality of allocation and reclamation cycles will generally cause paging. For example, if all pages in the heap must be allocated before a reclamation cycle begins. For this reason, it's generally not worthwhile to have a heap that's too large to fit in physical memory.

- By the time memory is reclaimed and reused, it's likely to have been paged out.

- Compaction is helpful, but generally "too little, too late." A simpleminded semispace copying collector is like to have more locality problems than mark-sweep simply because the copy collector uses twice the memory.

- GC pauses are annoying at best.

§ 3 Incremental Tracing Collectors

The difficulty with incremental tracing is that while the collector is tracing out the graph of reachable data structures, the graph may change—the running program may mutate the graph while the collector "isn't looking."… An incremental scheme must have some way of keeping track of the changes to the graph of reachable objects, perhaps re-computing parts of its traversal in the face of those changes.

An important characteristic of incremental techniques is their degree of conservatism with respect to changes made by the mutator during garbage collection.

§ 3.1 Tricolor Marking

Garbage collection algorithms can be described as a process of traversing the graph of reachable objects and coloring them. The objects subject to garbage collection are conceptually colored white, and by the end of collection, those that will be retained must be colored black…

the mutator can't be allowed to change things "behind the collector's back" in such a way that the collector will fail to find all reachable objects.

To understand and prevent such interactions between the mutator and the collector, it is useful to introduce a third color, grey, to signify that an object has been reached by the traversal, but that its descendants may not have been…

The importance of this invariant is that the collector must be able to assume that it is "finished with" black objects, and can continue to traverse grey objects and move the wavefront forward. If the mutator creates a pointer from a black object to a white one, it must somehow coordinate with the collector, to ensure that the collector's bookkeeping is brought up to date.

Incremental approaches

Two basic approaches to coordinating the mutator and collector:

- Read barrier

- Detects when mutator attempts to read a pointer to a white object and immediately colors the object grey.

- Write barrier

- When mutator attempts to write to a pointer, the

write is trapped or recorded. To foil the gc's marking traversal, the

mutator must 1) write a pointer to a white object into a black object,

and 2) destroy the original pointer to the white object before the

collector sees it. Write barriers fall into two basic categories

depending on which of the above conditions they address:

- Snapshot-at-beginning collectors ensure that the second condition can not happen—rather than allow pointer to be overwritten, they are first saved so that the collector can find them. Thus no paths to white objects can be broken without providing an alternative path for the collector to reach them.

- Incremental update collectors only record pointers to white objects that are stored into black objects (condition 1 above). When such a write is detected, the black object (or part of it) is reverted to grey. Alternatively, the pointed-to white object is immediately greyed.

§ 3.2 Baker's Incremental Copying

Baker's collector is a variation of the simple copying scheme in § 2.4 that uses a read barrier for coordination with the mutator.

Breadth-first copying proceeds interleaved with mutator operation. Any allocations done by the mutator are placed in the tospace, and therefore won't be gc'ed until the next cycle.

In order to ensure that all live objects are copied before new allocations exhaust tospace, gc is tied to allocations so that an incremental amount of gc is done for each allocation.

Additionally, the mutator is prevented from storing pointers into fromspace. If the mutator attempts to read a pointer to an object in fromspace, it's immediately copied to tospace and the pointer is updated.

The read barrier has the downside of being slow compared to a direct pointer access, since every pointer read must be checked and possibly redirected. Brook's proposed a variation where every object is accesses via an indirection pointer contained in the object. If the object lives in tospace, the object's indirection pointer points to itself. If the object is an obsolete copy in the fromspace, the indirection pointer points to the new version in tospace. Always accessing the pointer through indirection is slightly cheaper than conditional indirection, but still expensive compared to normal pointer access.

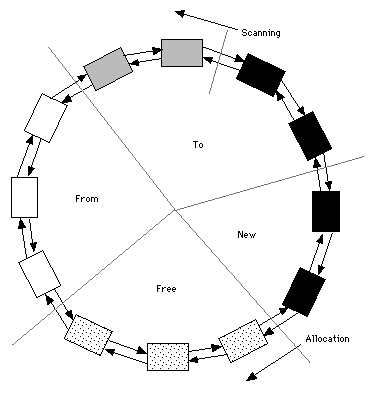

§ 3.3 The Treadmill

The Treadmill scheme is a combination of Baker's scheme from the previous section and the non-copying collector described in § 2.5.

The basic idea is that the freelist, from-space, to-space, and new-space are all linked together in a circular structure. At the start of gc, the new-space and to-space are both empty. Root objects are moved from the from-space list to the to-space list and marked grey. Scanning proceeds through the to-space marking scanned objects black. Allocation during gc happens in the new-space by following a pointer to the next block on the freelist. Newly allocated objects are immediately marked black. At the end of the gc cycle, the from-space can be appended to the freelist, and the new-space and to-space will become the new from-space on the next cycle.

§ 3.4 Snapshot-at-Beginning Write-Barrier Algorithms

When using a non-copying collector, there is no need for a read barrier. A write barrier can be used instead to prevent the mutator from overwriting a pointer to a live object.

Conceptually, at the beginning of GC, a virtual copy-on-write copy of the graph of reachable objects is made.

One simple and well-known snapshot algorithm is Yuasa's. If a location is written to, the overwritten value is first saved and pushed on a marking stack for later examination.

Pros

- Write barriers are much cheaper than read barriers on stock hardware, because pointer reads are much more common than pointer writes.

- Normal pointer dereferencing and comparison do not incur overhead.

Cons

- Yusasa's scheme is more conservative than Baker's. Not only will object's that are allocated during GC not get reclaimed until the next cycle, but no objects can be freed during GC (all overwritten pointers are preserved).

§ 3.5 Incremental Update Write-Barrier Algorithms

Best known algorithm due to Dijkstra et al. [DLM+78], which is similar to [Ste75], but simpler because it doesn't deal with compacting.

Rather than retaining everything that's live at the beginning of gc, incremental-update algorithms heuristically (and conservatively) attempt to retain all objects that are live at the end of gc. Objects that die during collection–and before being reached by marking traversal–are not traversed and marked.

Writes to pointers are trapped and the collector is notified if the mutator stores a pointer to a white object into a black object. The black object is then effectively greyed and re-scanned before gc terminates.

Thus, objects that become garbage during collection may be reclaimed at the end of the gc cycle, similar to Baker's read-barrier algorithm.

Incremental Update is less conservative than Baker or Yuasa's schemes w.r.t. newly allocated objects. In Baker's scheme, newly allocated objects are allocated "black", i.e. assumed to be live objects. In the Dijkstra et al. scheme, objects are allocated "white", and only marked when the set of roots is traversed.

Pros

- Garbage generated during gc can be reclaimed in the same cycle, rather than waiting for the next cycle.

- New objects are allocated white, allowing for fast reclamation of short-lived objects.

Cons

- There is a computational cost to allocating objects white, since you must then re-scan the root to determine which objects are still live (as opposed to allocating objects black and assuming they are reachable).

§ 3.6 Choosing Among Incremental Techniques

The choice of a read- or write-barrier scheme is likely to be made on the basis of the available hardware. Without specialized hardware support, a write-barrier appears to be easier to implement efficiently, because heap pointer writes are much less common than pointer traversals.

Appel, Ellis and Li [AEL88] use virtual memory (pagewise) access protection facilities as a coarse approximation of Baker's read barrier…

Of write barrier schemes, incremental update appears to be more effective than snapshot approaches…

Careful attention should be paid to write barrier implementation. Boehm, Demers and Shenker's incremental update algorithm uses virtual memory dirty bits as a coarse pagewise write barrier…

In a system with compiler support for garbage collection, a list of stored-into locations can be kept, or dirty bits can maintained (in software) for small areas of memory…

§ 4 Generational Garbage Collection

Given a realistic amount of memory, efficiency of simple copying garbage collection is limited by the fact that the system must copy all live data at a collection. In most programs in a variety of languages, most objects live a very short time, while a small percentatge of them live much longer.…

Even if garbage collections are fairly close together, separated by only a few kilobytes of allocation, most objects die before a collection and never need to be copied. Of the ones that do survive to be copied once, however, a large fraction survive through many collections. These objects are copied at every scavenge, over and over, and the garbage collector spends most of its time copying the same old objects repeatedly. This is the major source of inefficiency in simple garbage collectors.

Generational collection avoids much of this repeated copying by segregating objects into multiple areas by age, and scavenging areas containing older objects less often than the younger ones.

… The choice of copying or marking collection is essentially orthogonal to the issue of generation collection…

§ 4.2 Detecting Intergenerational References

In order for the generational scheme to work, it's necessary to be able to detect references from older generations to newer generations when scavenging, otherwise some live objects in the newer generation may be reclaimed, or else pointers to the object will not be updated when it's copied.

In the original generational scheme, no pointer in old memory may point directly to an object in new memory; instead it must point to a cell in an indirection table, which is used as part of the root set.

On stock hardware, the indirection table introduces an overhead similar to Baker's read barrier, in which case it might make more sense to use a pointer recording technique.

Rather than indirecting pointers from old objects to young ones, normal (direct) pointers are allowed, but the locations of such pointers are noted so that they can be found at scavenge time. This requires something like a write barrier; that is, the running program cannot freely modify the reachability graph by storing pointers into objects in older generation…

The important point is that all references from old to new memory must be located at scavenge time, and used as roots for the copying traversal…

As in an incremental collector, this use of a write barrier results in a conservative approximation of true liveness; any pointers from old to new memory are used as roots, but not all of these roots are necessarily live themselves.

Within a generational collector, the write barrier cost is likely to be the most expensive operation.

Several important questions regarding generational collectors remain:

- Advancement policy. How long must an object survive in one generation before it is advanced to the next?

- Heap organization. How should storage space be divided between and within generations? How does the resulting reuse pattern affect locality at the virtual memory level? At the cache level?

- Traversal algorithms. In a tracing collector, the traversal of live objects may have an important impact on locality. In a copying collector, objects are also reordered as they are copied. What affect does this have on locality, and what traversal yields the best results?

- Collection scheduling. For a non-incremental collector, how might we avoid disruptive pauses? Can we improve efficiency by opportunistic scheduling? Can this be adapted to incremental schemes to reduce floating garbage?

- Intergenerational references. What is the best way to detect intergenerational references?

References

This is not the full list of references from the paper, just the ones that are mentioned elsewhere in this document.

- [AEL88]

- A. W. Appel, J. R. Ellis, and K. Li. 1988. Real-time concurrent collection on stock multiprocessors. In Proceedings of the ACM SIGPLAN 1988 conference on Programming language design and implementation (PLDI ’88). Association for Computing Machinery, New York, NY, USA, 11–20. DOI:https://doi.org/10.1145/53990.53992

- [Bak92]

- Henry G. Baker. 1992. The treadmill: real-time garbage collection without motion sickness. SIGPLAN Not. 27, 3 (March 1992), 66–70. DOI:https://doi.org/10.1145/130854.130862

- [Che70]

- C. J. Cheney. 1970. A nonrecursive list compacting algorithm. Commun. ACM 13, 11 (Nov 1970), 677–678. DOI:https://doi.org/10.1145/362790.362798

- [DB76]

- L. Peter Deutsch and Daniel G. Bobrow. 1976. An efficient, incremental, automatic garbage collector. Commun. ACM 19, 9 (Sept. 1976), 522–526. DOI:https://doi.org/10.1145/360336.360345

- [DLM+78]

- Edsger W. Dijkstra, Leslie Lamport, A. J. Martin, C. S. Scholten, and E. F. M. Steffens. 1978. On-the-fly garbage collection: an exercise in cooperation. Commun. ACM 21, 11 (Nov. 1978), 966–975. DOI:https://doi.org/10.1145/359642.359655

- [FY69]

- Robert R. Fenichel and Jerome C. Yochelson. 1969. A LISP garbage-collector for virtual-memory computer systems. Commun. ACM 12, 11 (Nov. 1969), 611–612. DOI:https://doi.org/10.1145/363269.363280

- [Ste75]

- Guy L. Steele. 1975. Multiprocessing compactifying garbage collection. Commun. ACM 18, 9 (Sept. 1975), 495–508. DOI:https://doi.org/10.1145/361002.361005

- [Wil92]

- Paul R. Wilson. 1992. Uniprocessor Garbage Collection Techniques. In Proceedings of the International Workshop on Memory Management (IWMM ’92). Springer-Verlag, Berlin, Heidelberg, 1–42.